Introduction

Welcome.

The main purpose of this set of articles is to empower you, the reader, to radically change your development processes. Strap in and hold on: we are about to give you a fast-paced tour of some of the Agile Development Techniques available and in common use by some of the most successful software teams. This series of articles will attempt to give the aspiring team an introduction to Agility from a developer perspective.

We focus in this series of articles on what a developer needs to know to start their adventures into Agility. We wish to empower the reader to ask questions, research and form their own techniques, rather than following a set-in-stone Scrum / XP / DSDM style process and trying to mould their development team and environment to fit it. The flavour of this series reflects our preference for an XP and B/TDD style approach, but we want to be clear that we are not saying this is the only path. In our experience, the most successful teams out there take Agile at its word and adapt until they have a recipe that works for them.

Although Test Driven Development (TDD) is not the only tool in the Agile toolset, from a developer’s perspective it is central to Agile development. We will therefore adopt an inside-out approach, focusing on TDD within this article and discussing how techniques, tools and technologies empower TDD in the modern development environment.

This article will not attempt to explain the Agile process, as that is outside the scope of a technology-centric article.

We urge you to constantly compare your day-to-day experiences with the content of this article and to ask yourself a few questions…

● Did I have fun today?

● Was I productive?

● Did I empower my team mates?

● Can I do it all again tomorrow?

● Have I moved further along the project journey?

● Do I feel good about the decisions I made today?

Finally, the most important questions…

● Did I do the right thing today?

● Did I build the team?

If the answer is ‘No’ to any of these questions, we encourage you to investigate why and then ring the changes tomorrow. Remember, nothing is set in stone in the world of Agility. We only follow a course for as long as it empowers our purpose and fits our development shape; everything is fluid and changeable.

Interestingly, questions such as…

● Can I deliver on time?

● Was I pragmatic today?

● Am I playing the right requirements?

are not for the agile developer to consider. They are either team questions or, more commonly, questions for project managers and stakeholders. This is pressure that never belongs with the development team; pressure of this nature should be considered toxic.

The agile developer must focus on quality first and foremost; otherwise, in our experience, the technical members of the team will later be bitten by any compromise made in the name of velocity. As a final note, I would just say it is a shame that so many teams learn the lesson of quality over progress far too late.

Introducing Test Driven Development

When a team looks to adopt any new technique, the first questions it asks are ‘Why?’ and ‘What do we gain?’, and these are very sensible questions. Let me try to provide enough information to encourage you to explore further. Test driving your code base provides a number of benefits, including…

Test Driven Development provides safety against unintended change:

Your code is driven from your tests, so provided your tests pass and go green, you can be reasonably confident that the change you just made hasn’t broken your code base.

It is substantially easier to pair program if you’re working in a test driven fashion:

This is principally because your coding goal is bounded by the scope of the test, allowing the pair to establish a rhythm based on getting each test to pass.

We write less code and have less code to maintain:

Test driven code allows us to keep our maintenance footprint small by allowing us to write only the code our tests drive us to write.

Test driven development allows developers as a team to practice Emergent Design:

Developers only create artifacts and introduce appropriate patterns into the code base when the test they are working on drives them to that implementation.

A final personal thought: test driven development, when undertaken with full commitment and adequate skill, empowers developers to write more concise, higher quality, cleaner and easier to maintain code. Test driven development also promotes cohesion between the business requirements and the resulting code base, otherwise referred to as the end product.

We encourage you to give it a try and watch your team life change for the better.

Concepts

Agile development is a large and sometimes daunting subject. In our experience, it is important to understand a wide range of topics at an introductory level. To provide a springboard into the Agile development world, we have provided concise introductions to many of the concepts a team will benefit from understanding before undertaking an Agile project.

The following articles are introductions to these subjects, and the reader is encouraged to use the information they contain as a starting point for further research into Agile concepts.

Concepts: Test Driven Development

The Need for Quality Requirements

One of the main aims of Iterative Requirement Driven development, using techniques like Test Driven Development and Emergent Design, is to remove ambiguity from the requirements. There is no room in an Iterative Requirement Driven approach for the developer to add features or code ad hoc to deal with edge cases they think might happen but for which there are no explicit requirements.

The simple rule with the Iterative Requirement Driven approach is that if it isn’t a written requirement, it isn’t built. Developers do get to propose technical requirements and to raise concerns about edge cases. These can then be played, as appropriate, by the Agile Customer or the Business Analysts, normally during an iteration / sprint planning exercise, and subsequently built by the development team.

In order for developers to work in such a constrained fashion and to focus their attention on the technical delivery of very granular requirements, the requirements must be of a high quality.

There are a number of approaches to achieving high quality requirements. We will focus on one common approach and its component elements in this article, but I do recommend that you read around the subject; a particularly good book to start with is…

User Stories Applied, Mike Cohn: http://www.amazon.co.uk/User-Stories-Applied-Development-Signature/dp/0321205685/ref=sr_1_fkmr3_1?ie=UTF8&qid=1362164427&sr=8-1-fkmr3

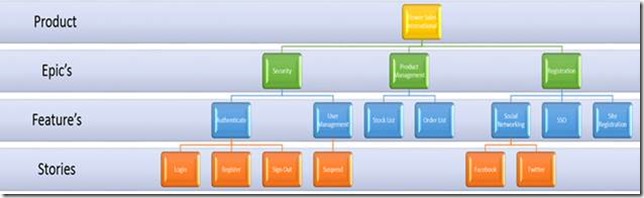

Sample Requirement Tree

Next we present a requirement structuring strategy that has worked on a number of medium to large projects. We then discuss it in some depth and explain how you can use it to empower your requirement gathering and definition, the end product being a high quality requirement deck for developers and other team members to work with.

Product – Elevator Story

The easiest way to think of the product elevator story is this: what you would tell someone if you had 30 seconds, roughly the length of a trip in an elevator, to describe the product and the project. This link has some very helpful advice on how to develop an elevator story.

http://www.bizjournals.com/twincities/stories/2002/11/11/smallb2.html?page=all

A high quality elevator story keeps everyone focused, and every decision should be played against it as a safeguard for staying on track. If a decision doesn’t play well against the elevator story, the team must review both the decision and the story and alter one, the other, or both. A more complex version of this is the use of a Metaphor; the following link discusses the Metaphor concept in more detail.

Epics

The top-level grouping of requirements is called an epic. You can think of it as an area of the product you are planning to build. Documentation at this level usually includes flow charts, product maps, client briefing documents, vision documents, regulatory papers, etc.

Examples of Epics

● Security

● Sales

● Administration

● Accounts

● Stock Control

Features

Features are the next level down from Epics and are grouped under them. A feature defines a smaller area of the application: this might be a page or a view, or it might be a cross-cutting concern across the product. Documents at this level tend to include screen mock-ups, smaller functional flows, vision documents, guidance documents, etc.

Examples of Features

● Login

● Register

● Make online sale

● Display Products

● Search Products

● Add Stock

● Suspend Stock Line

Stories

Stories are perhaps the most important and detailed artefact we have in the requirement deck. Stories live at the bottom of the requirement tree. They can be written in a number of ways, although Gherkin or a Gherkin derivative tends to be the preferred expression language. Stories typically form work items for developers or developer pairs and are the artefacts played across the story board.
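As an illustration only, the Epic, Feature and Story levels described above form a simple tree that can be modelled with a few C# classes. The class and method names are hypothetical; the Security epic, the Login and Register features and the three credential scenarios come from the examples in this article.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of the requirement tree: Epic > Feature > Story.
public class Story
{
    public string Title { get; }
    public List<string> Scenarios { get; } = new List<string>();
    public Story(string title) { Title = title; }
}

public class Feature
{
    public string Name { get; }
    public List<Story> Stories { get; } = new List<Story>();
    public Feature(string name) { Name = name; }
}

public class Epic
{
    public string Name { get; }
    public List<Feature> Features { get; } = new List<Feature>();
    public Epic(string name) { Name = name; }

    // Stories sit at the bottom of the tree; flattening them gives the
    // work items that are played across the story board.
    public IEnumerable<Story> AllStories() => Features.SelectMany(f => f.Stories);
}

public static class RequirementDeckExample
{
    public static Epic BuildSecurityEpic()
    {
        var story = new Story("Registered user is challenged for credentials");
        story.Scenarios.Add("Valid Credentials entered");
        story.Scenarios.Add("Invalid Credentials entered");
        story.Scenarios.Add("No credentials entered");

        var login = new Feature("Login");
        login.Stories.Add(story);

        // A feature may exist before any of its stories have been written.
        var register = new Feature("Register");

        var security = new Epic("Security");
        security.Features.Add(login);
        security.Features.Add(register);
        return security;
    }
}
```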

Story format

● Some determinable business situation

● Given some precondition

● And some other precondition

● When some action by the actor

● And some other action

● And yet another action

● Then some testable outcome is achieved

● And something else we can check happens too

An example story written using a Gherkin-style syntax; note also the use of scenarios:

As a Registered User

Given that I am not logged in

And I am at the home page

When I browse to the product page

Then I expect to be challenged for my credentials

Scenario [Valid Credentials entered] …..

Scenario [Invalid Credentials entered] …..

Scenario [No credentials entered] …..

Pure Gherkin, Cucumber and text based Behavior Driven Development engines

Another approach to story definition is for the business to write “feature” files. These are in effect “Stories”: each feature contains both the definition of the story and the scenarios relevant to it. The developer then executes the feature file and is led to write code to satisfy the story. In the .NET world, SpecFlow is a common framework used for working in this way. Further information on the SpecFlow framework can be found at the following link.

http://www.specflow.org/specflownew/
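To make the feature-file workflow more concrete, here is a minimal sketch of SpecFlow step definitions for the credentials story shown earlier, with the story rewritten as a Gherkin Feature/Scenario in the comment at the top. It assumes the SpecFlow NuGet package, whose [Binding], [Given], [When] and [Then] attributes live in the TechTalk.SpecFlow namespace. FakeSite and its page names are hypothetical stand-ins for the real application.

```csharp
// Hypothetical ProductPage.feature written by the business:
//   Feature: Product page requires credentials
//     Scenario: Visitor who is not logged in browses to the product page
//       Given that I am not logged in
//       And I am at the home page
//       When I browse to the product page
//       Then I expect to be challenged for my credentials

using TechTalk.SpecFlow;

// Hypothetical stand-in for the system under test.
public class FakeSite
{
    public bool LoggedIn { get; set; }
    public string CurrentPage { get; private set; } = "none";
    public bool CredentialsChallenged { get; private set; }

    public void Open(string page)
    {
        // Hypothetical rule: the product page is for registered users only.
        CredentialsChallenged = page == "products" && !LoggedIn;
        CurrentPage = CredentialsChallenged ? "login" : page;
    }
}

[Binding]
public class ProductPageSteps
{
    private readonly FakeSite _site = new FakeSite();

    [Given(@"that I am not logged in")]
    public void GivenThatIAmNotLoggedIn() => _site.LoggedIn = false;

    // An "And" line binds to the same step type as the line before it.
    [Given(@"I am at the home page")]
    public void GivenIAmAtTheHomePage() => _site.Open("home");

    [When(@"I browse to the product page")]
    public void WhenIBrowseToTheProductPage() => _site.Open("products");

    [Then(@"I expect to be challenged for my credentials")]
    public void ThenIExpectToBeChallengedForMyCredentials()
    {
        // Throwing fails the step; an assertion library would normally be used here.
        if (!_site.CredentialsChallenged)
            throw new System.Exception("Expected to be challenged for credentials");
    }
}
```

SpecFlow matches each line of the scenario against the regular expression in a step attribute, so until every line has a matching binding the scenario cannot pass; that is what leads the developer from the feature file to the code.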

Another alternative is to use the Cucumber framework; this article shows you how to do this:

http://gojko.net/2010/01/01/bdd-in-net-with-cucumber-cuke4nuke-and-teamcity/

Additional Requirement Artefacts

There is a common misconception concerning agile methodology and requirement driven development: that working with these methodologies means ‘No’ documentation. This is simply not true. Please allow me to address this misconception by giving an example of the documentation generated for a typical project.

The project

Let us look at the elevator statement for the project.

Elevator statement: We are developing a web portal to be hosted in the cloud, which must follow regulatory standards and be able to cope with burst traffic up to 1000 hits per hour. The purpose of the portal is to provide real time financial tracking information for trading funds in a graphical format. The data must update every 10 seconds.

Given the above statement and a little forward thinking, let us now consider some of the documentation we may need…

● We need copies of the regulatory standards that the project must conform to, possibly with additional summaries of this documentation.

● We need performance information on the cloud platform and on web-based solutions hosted in the cloud, so we can start to think about scale

● We need code spikes that test the performance of a given architecture. We also need wiki pages documenting the spikes.

● We need screen mock-ups (see Balsamiq Mockups: http://www.balsamiq.com/products/mockups) for each layout, design, etc. of the website

● We really could do with journeys from multiple actors’ perspectives that reflect their imagined paths through the application

● We need an Epic, Feature, Story pack for each iteration we will play plus the backlog.

● We need to clarify the epics, features and stories with attached artefacts such as…

● Detailed screen mocks

● Sample data

● Algorithms to underpin the data visualizations

● Example data visualizations

● Logic flows

● We need to note any risks or unknowns

● We need to start noting non-functional requirements and make sure they are expressed in the requirement deck

● We will also need step by step QA test plans against each story played

● We will need release documentation

● We will need a deployment plan

● We will need documentation to support UAT and FAT

Some of the above documentation will be drawn up and developed in the initial iterations, before production starts. Much of it will grow as the project progresses, and as more requirements are drawn up, more artifacts will be required. The main difference between a “Waterfall” style project and an Agile or requirement driven project is that the latter is iterative. It is therefore an accepted cultural fact that the documentation set is a living entity, not a static set of documents that forms some sort of initial project sign-off set. Further thoughts on the difference between traditional waterfall style projects and agile emergent projects are expressed at this link.

Requirement Workshop Example

The following represents a classic workshop process and its final output. Typically, much of this information would initially be captured on a whiteboard and only the end products would be entered into our requirement management system, although various items that provide context can also be taken from the session and captured.

Epic – Buying Flowers

I want to be able to buy a bunch of flowers online

Initial Requirement fact statements

This exercise starts the team thinking about scope and what they want to achieve in terms of deliverables.

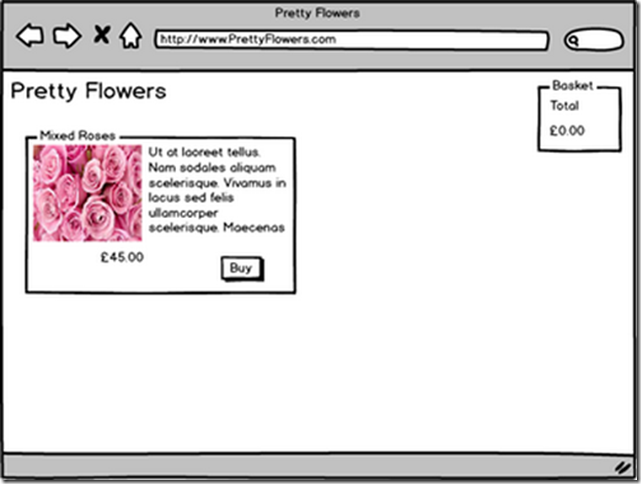

● The user must be able to add a flower product to the basket

● When the user adds anything to the basket, the basket total displayed on the web page must update

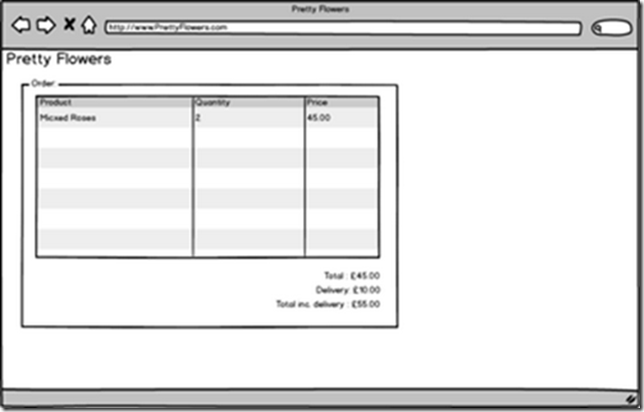

● When a user navigates to the checkout page, the user must see all the items listed

● The user must be able to remove an item from the basket

● When a user removes an item from the basket the total value of the basket shown must reduce by the value of the item removed

Break out the features from the fact statements

Refinement and Breakdown

Customer can purchase flowers

Fact Statements

● The user must be able to add a flower product to the basket

● When the user adds anything to the basket, the basket total displayed on the web page must update

Customer can remove flowers from basket

Fact Statements

● The user must be able to remove an item from the basket

● When a user removes an item from the basket the total value of the basket shown must reduce by the value of the item removed

Customer can see all their potential purchases on the checkout page

Fact Statements

● When a user navigates to the checkout page, the user must see all the items listed
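The fact statements above translate almost directly into tests. The sketch below shows one possible way to do that with NUnit, assuming hypothetical Basket and Product classes and made-up prices; each fixture is named after a broken-out feature and each test after a fact statement, so every test can be traced back to the workshop output. The checkout page feature is left out for brevity.

```csharp
using System.Collections.Generic;
using System.Linq;
using NUnit.Framework;

// Hypothetical production code driven out by the fact statements.
public class Product
{
    public string Name { get; }
    public decimal Price { get; }
    public Product(string name, decimal price) { Name = name; Price = price; }
}

public class Basket
{
    private readonly List<Product> _items = new List<Product>();
    public IReadOnlyList<Product> Items => _items;
    public decimal Total => _items.Sum(p => p.Price);
    public void Add(Product product) => _items.Add(product);
    public bool Remove(Product product) => _items.Remove(product);
}

[TestFixture]
public class CustomerCanPurchaseFlowers
{
    [Test]
    public void The_user_can_add_a_flower_product_to_the_basket()
    {
        var basket = new Basket();
        var roses = new Product("Premium mixed roses", 30.00m);

        basket.Add(roses);

        Assert.That(basket.Items, Has.Member(roses));
    }

    [Test]
    public void Adding_anything_to_the_basket_updates_the_basket_total()
    {
        var basket = new Basket();

        basket.Add(new Product("Teddy bear", 12.50m));

        Assert.That(basket.Total, Is.EqualTo(12.50m));
    }
}

[TestFixture]
public class CustomerCanRemoveFlowersFromBasket
{
    [Test]
    public void Removing_an_item_reduces_the_total_by_the_value_of_that_item()
    {
        var basket = new Basket();
        var roses = new Product("Premium mixed roses", 30.00m);
        basket.Add(roses);
        basket.Add(new Product("Teddy bear", 12.50m));

        basket.Remove(roses);

        Assert.That(basket.Total, Is.EqualTo(12.50m));
        Assert.That(basket.Items, Has.No.Member(roses));
    }
}
```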

Establishing Context

Customer Perspective Buying Flowers: A customer is a user of the system; the customer’s intent is to buy some flowers.

Actor statements

● Sally is a businesswoman whose day is crammed with meetings; she hasn’t had time to get a gift for her mother’s birthday.

● Sally sees an advert for the flower sales website on the Tube on the way into work.

● Sally opens a web browser and, having remembered the ‘Catchy site name’, finds the Flower website via Google. Sally decides to send some flowers to her mum for her birthday.

● Sally selects a Premium bouquet of mixed Roses and a Teddy bear and adds them to the digital shopping basket.

● Sally then browses to the checkout page and enters her credit card details.

● Sally’s payment is authorised and she receives a confirmation email.

● Sally is pleased when she receives a phone call from her mum to thank her for the lovely flowers.

Screen Mock-ups

1. Product Selection Page

2. Checkout Page

Example story broken out of the requirement session

Can see a product with a picture and title

In order to choose some flowers to buy

As a Customer

I want to see the product details

Scenario 1: I have browsed to the product page

Given that I have browsed to the Pretty Flowers Web Site

When I open the Products Page

Then I should see a Product

And the product should have a title

And the product should have a picture

And the product should have a description

And the product should have a button labelled “Buy”

Final Thoughts

The effort and time required to produce good quality requirements should not be underestimated. Initial requirement definitions should be seen only as a starting point; defining requirements is an ongoing activity, and developers and other actors within the development cycle will generally need many conversations while building the product, outside of the initial requirement definition sessions. It is important to understand that the success of requirement driven development and agile practices depends primarily on good quality, frequent communication between all the practitioners involved in the project.

http://agilemanifesto.org/principles.html

Because of the cohesive nature of requirement driven development, and regardless of the project management methodology used, it is vital that requirements are detailed and of a high quality. They also need to be supported by appropriate artefacts. It is equally vital that requirements are sized appropriately for the project approach; as a general rule, I personally break a story apart into smaller stories if it is estimated to take more than 3 pair days to develop.

“Bad requirements make for poor quality tests and poor deliveries”

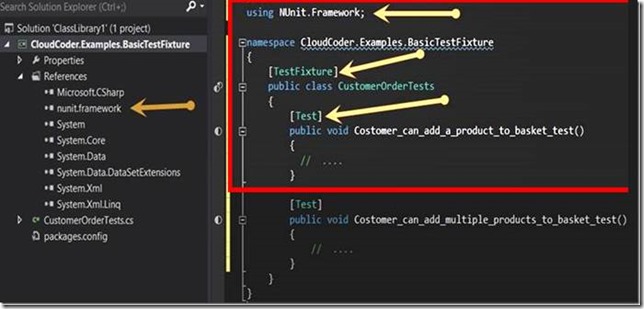

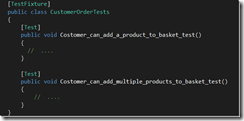

Throughout this article you will see references to TestFixtures, so what is a TestFixture?

At its most basic, a TestFixture is a C# class decorated with the [TestFixture] attribute, which tells the test runner that the class is a test container. A test fixture can also contain special methods that are run at the start and end of its test run. Most teams have a number of TestFixtures and use them to split their tests into groupings.

A Basic TestFixture

The image below shows the basic structure of a TestFixture; you will also see from the image that the NUnit references have been added, which was done via NuGet [http://nuget.org/]. We discuss the other NUnit test attributes later in this article.
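A minimal sketch of such a basic TestFixture, assuming NUnit has been added through NuGet (for example with `Install-Package NUnit` in the Package Manager Console). The Calculator class is hypothetical and exists only so that the fixture has something to test.

```csharp
using NUnit.Framework;

// [TestFixture] tells the test runner that this class contains tests.
[TestFixture]
public class CalculatorTests
{
    // [Test] marks a method the runner should execute as a test.
    [Test]
    public void Adding_two_and_three_gives_five()
    {
        var calculator = new Calculator();
        Assert.That(calculator.Add(2, 3), Is.EqualTo(5));
    }
}

// Hypothetical class under test, included so the sketch compiles on its own.
public class Calculator
{
    public int Add(int a, int b) => a + b;
}
```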

Assertions Explained

The main purpose of a test is to assert or verify something; there are three commonly used types of test…

● Value based tests – This type of test asserts that the returned value is the expected value or of the expected type

● Behaviour verification tests – This type of test asserts that an expected behaviour has occurred as a result of some action

● Schema Verification Tests – This type of test asserts that a given property or attribute has been added to a class [This is advanced and will not be covered in this article for further information see – http://code.google.com/p/cavity/wiki/CavityTestingUnit ]

The main purpose of a test, therefore, is that given a set of conditions you set up in the test and a given action, you can assert that something you expected happened, or that the value you expected was passed back to your test.

We generally split a ‘value based test’ into 3 parts

Arrange

The code to set up the pre-condition would go into this section, as well as setting up any participants within your test, such as an ASP.NET MVC controller.

Act

This is where we perform some action against the system under test

Assert

Here we would assert that an expected condition was met.
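A minimal value based test laid out as Arrange, Act, Assert. To keep the sketch free of any web framework, it uses a hypothetical VatCalculator in place of a controller; the 20% rate is an arbitrary example value.

```csharp
using NUnit.Framework;

// Hypothetical system under test: adds VAT at a fixed rate to a net price.
public class VatCalculator
{
    private readonly decimal _rate;
    public VatCalculator(decimal rate) { _rate = rate; }
    public decimal GrossPrice(decimal net) => net * (1 + _rate);
}

[TestFixture]
public class VatCalculatorTests
{
    [Test]
    public void Gross_price_includes_vat_at_the_configured_rate()
    {
        // Arrange: set up the pre-conditions and the participant under test.
        var calculator = new VatCalculator(0.20m);

        // Act: perform the action against the system under test.
        decimal gross = calculator.GrossPrice(50.00m);

        // Assert: check that the returned value is the expected value.
        Assert.That(gross, Is.EqualTo(60.00m));
    }
}
```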

We would split up ‘Behavior verification tests’ slightly differently

Arrange

The code to set up the pre-condition would go into this section as well as setting up any participants within your test such as a mocked service.

Act

In this section we would perform an action on the system under test

Verify

Here we would verify that some action happened
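A behaviour verification test follows the Arrange, Act, Verify shape. The sketch below uses a hand-written spy in place of a mocking library, so it depends only on NUnit; OrderService, IEmailService and SpyEmailService are hypothetical names.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Hypothetical collaborator whose use we want to verify.
public interface IEmailService
{
    void SendConfirmation(string emailAddress);
}

// Hypothetical system under test.
public class OrderService
{
    private readonly IEmailService _email;
    public OrderService(IEmailService email) { _email = email; }

    public void PlaceOrder(string customerEmail)
    {
        // Order persistence omitted; only the behaviour under test is shown.
        _email.SendConfirmation(customerEmail);
    }
}

// A hand-rolled spy that records every call made to it.
public class SpyEmailService : IEmailService
{
    public List<string> SentTo { get; } = new List<string>();
    public void SendConfirmation(string emailAddress) => SentTo.Add(emailAddress);
}

[TestFixture]
public class OrderServiceTests
{
    [Test]
    public void Placing_an_order_sends_a_confirmation_email_to_the_customer()
    {
        // Arrange: the system under test gets the spy instead of a real service.
        var email = new SpyEmailService();
        var orders = new OrderService(email);

        // Act
        orders.PlaceOrder("sally@example.com");

        // Verify: exactly one confirmation was sent, to the right address.
        Assert.That(email.SentTo, Has.Count.EqualTo(1));
        Assert.That(email.SentTo[0], Is.EqualTo("sally@example.com"));
    }
}
```

With a mocking library such as Moq, the spy would be replaced by a `Mock<IEmailService>` and the verify step would read along the lines of `emailMock.Verify(e => e.SendConfirmation("sally@example.com"), Times.Once());`.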

We will see assertions in action in our end-to-end examples a little later in this document, but for now I hope this imparts a basic understanding.

When we talk about side effects in Test Driven Development, we mean that test fixtures must be side-effect free. So what does this mean?

When we author tests, we must remember that a test fixture must not make permanent changes to data or the environment, so any setup required for the test fixture to run must have its effects reversed when the fixture’s test run completes.

Fortunately, we have a set of special attributes with which we can decorate operations. The test runner executes the decorated operations at different points in the TestFixture and Test lifetimes.

The attributes the test framework makes available enable the provision of operations for each step in both the Test and the TestFixture Lifetime. Let us now look at these attributes in more detail.

Attributes that affect the TestFixture Lifetime

● TestFixture

● TestFixtureSetUp

● TestFixtureTearDown

Attributes that affect the Test Lifetime

● Test

● SetUp

● TearDown

Please note that xUnit and the Visual Studio Test Framework take a different approach; please see these links:

Visual Studio Test Framework:

MSTest

http://msdn.microsoft.com/en-gb/library/microsoft.visualstudio.testtools.unittesting.aspx

xUnit

http://xunit.codeplex.com/wikipage?title=Comparisons&ProjectName=xunit#attributes

Since we are focusing on NUnit in this article I will now discuss the effect of each attribute.

● TestFixture

The TestFixture Attribute is used to mark a class as a test suite.

● TestFixtureSetUp

An operation decorated with the TestFixtureSetUp attribute is executed by the test runner once, when the Test Fixture is loaded and before any of its tests run. The decorated operation will generally perform setup actions that are valid for all the tests contained in the Test Fixture. A good example of an object we might initialize in this operation would be any Mock / Fake services required by the tests.

● TestFixtureTearDown

An operation decorated with the TestFixtureTearDown attribute is executed by the test runner once, when the Test Fixture has finished executing. The decorated operation will contain code to clean up objects and data that might have been used by the TestFixture, including any objects set up in the TestFixtureSetUp operation.

● Test

The Test attribute is used to mark an operation as a test, which the test runner should run.

● SetUp

The SetUp attribute is used to mark an operation to be run before each test in the TestFixture.

● TearDown

The TearDown attribute is used to mark an operation to be run after each test in the TestFixture, regardless of whether the test succeeded.

When we implement operations marked with these attributes sensibly, we guarantee that our tests are side-effect free: anything we do within the test fixture or a test, we clean up on completion. This is the definition of a side-effect free TestFixture; we can say that the test and its effects are atomic.

There are other test attributes, such as [Ignore], that are not covered in this section. The full list and explanation can be found at this link…

http://www.nunit.org/index.php?p=attributes&r=2.2.10
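The sketch below shows where each lifetime attribute fits and how pairing setup with teardown keeps a fixture side-effect free. It assumes the NUnit 2.x attribute names used in this article; NUnit 3 renamed [TestFixtureSetUp] and [TestFixtureTearDown] to [OneTimeSetUp] and [OneTimeTearDown] and deprecated the old names. The stock-file scenario is hypothetical, with the file system standing in for any shared resource such as a database.

```csharp
using System.IO;
using NUnit.Framework;

[TestFixture]
public class StockFileTests
{
    private string _fixtureDirectory;   // shared by every test in the fixture
    private string _stockFile;          // recreated for each test

    [TestFixtureSetUp]   // NUnit 3+: [OneTimeSetUp]
    public void CreateFixtureDirectory()
    {
        // Runs once, before any test in this fixture.
        _fixtureDirectory = Path.Combine(Path.GetTempPath(), Path.GetRandomFileName());
        Directory.CreateDirectory(_fixtureDirectory);
    }

    [SetUp]
    public void CreateStockFile()
    {
        // Runs before each test, so every test starts from the same known state.
        _stockFile = Path.Combine(_fixtureDirectory, "stock.txt");
        File.WriteAllText(_stockFile, "Roses=10");
    }

    [Test]
    public void Stock_file_starts_with_ten_roses()
    {
        Assert.That(File.ReadAllText(_stockFile), Is.EqualTo("Roses=10"));
    }

    [Test]
    public void Selling_all_roses_updates_the_stock_file()
    {
        File.WriteAllText(_stockFile, "Roses=0");
        Assert.That(File.ReadAllText(_stockFile), Is.EqualTo("Roses=0"));
    }

    [TearDown]
    public void DeleteStockFile()
    {
        // Runs after each test, whether it passed or failed.
        if (File.Exists(_stockFile)) File.Delete(_stockFile);
    }

    [TestFixtureTearDown]   // NUnit 3+: [OneTimeTearDown]
    public void DeleteFixtureDirectory()
    {
        // Runs once, after every test has finished, so no footprint is left behind.
        if (Directory.Exists(_fixtureDirectory))
            Directory.Delete(_fixtureDirectory, recursive: true);
    }
}
```

Because [SetUp] rewrites the file before every test, the first test still sees “Roses=10” even if the test that overwrites the file happens to run before it, and [TestFixtureTearDown] removes the temporary directory once the fixture is done.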

“When practicing Test Driven Development or any of the family of requirement driven development practices, it is very important to have high quality, highly granular requirements.”

When working in a test driven manner, one must have a starting point, and good quality requirements act as that starting or entry point. One way to achieve strong cohesion with the requirements is to use a modern variant of traditional Test Driven Development known as the outside in approach. Let us first look at a more traditional approach to Test Driven Development.

“Traditional Test Driven Development has the distinct disadvantage of needing the developer to interpret requirements at too high a level.”

The statements above are a little vague, so let us look at a couple of comparisons that will lead to a fuller understanding of the cohesion between requirements and code.

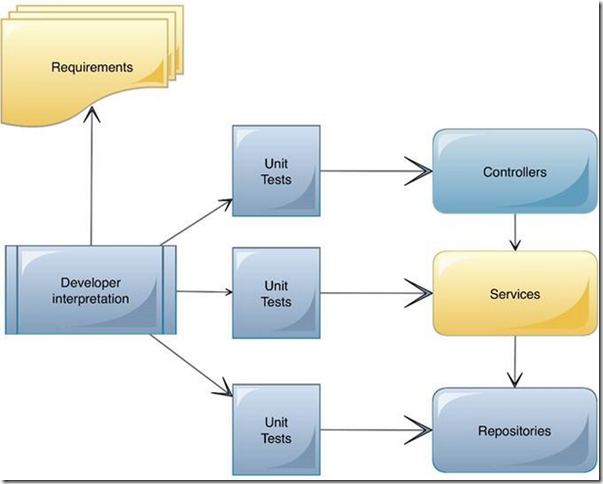

Traditional Test Driven Development

When test driven development first started to appear in developers’ tool sets, the workflow from requirements looked something like this…

● Business Analyst would write a story – Granular requirement

● The developer would pick up the story

● The developer would then decide, “Ah, I need a security controller,” and so would produce a test fixture called SecurityControllerTests and the associated tests, testing only the controller and mocking or faking out any other dependencies, but driving out the controller.

● The developer would then move to the next layer in the stack and think, “Ah, I need a security service,” then produce a set of tests to drive out the security service, mocking its one remaining dependency, let’s say the repository.

● The developer might stop at this point or he might write a set of tests to drive out either the repository interface or the repository and resultant database entities.

Points to note about this workflow

● The developer in this case started above the controller as opposed to above the UI. This is typical in most test driven scenarios. It is perfectly legitimate either to test drive a browser instance to test the UI, or to parse the HTML output with something like XPath to check that the right HTML has been returned. Both of these methods prove very expensive, especially when test driving on an operation-by-operation basis.

● The developer wrote the tests to test each operation on each object in complete isolation from other objects in the code stack by mocking out dependencies.

● The developer interpreted the requirements given in the form of a story and then decided which objects to test drive. This is the potential weakness of this methodology: we do not have tests that mirror the acceptance criteria in the story.

● The result of not having requirement coverage in the form of top-level tests is that our code lacks cohesion with the original story, and thus with the requirements. In simple terms, this means the code cannot cleanly be traced back to the Business Analyst’s interpretation of the requirement; instead it is dictated by the developer’s assumptions.

● The end result is that we can only talk in terms of code coverage. Code coverage refers to the proportion of code paths that are covered by a test of some description. This, however, leads to a false feeling of safety, as we cannot judge functional coverage.

● Functional coverage is the test coverage of the functionality of the system, as opposed to code coverage, which is the coverage of the code elements.

● Teams that operate in this fashion tend to need a lot of QA support and quite often end up producing a separate suite of functional tests, also known as acceptance tests.

Commentary on Workflow

First of all, let me say that there is nothing wrong with working in this way, but it does not harness the full power and scope of requirement driving your code and having your code under test.

Working in this fashion tends to be quick and allows relatively stress-free production of code. However, it is also dangerous, because developers can wander from the requirements and write more code than is needed to satisfy them, which in turn introduces more complexity and a larger maintenance footprint.

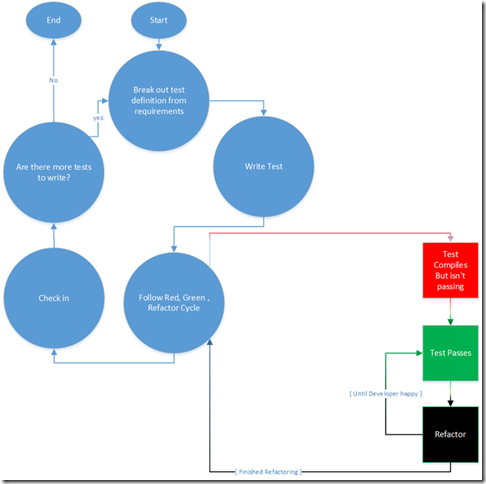

Diagram of Traditional Test Driven Flow and Scope

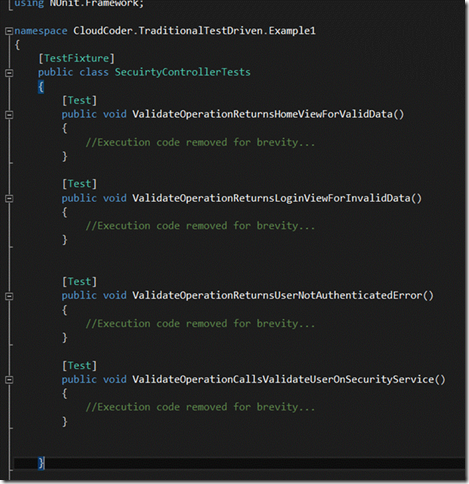

C# example of traditional test definitions

The code snippet below shows a traditional style test fixture. I have removed the operational code for brevity, as it is unimportant; the test titles show the intent of the test fixture, which is to drive out the validate operation on the security controller. Eventually this fixture would be expanded to define the full scope of the security controller, at which point it might be refactored into multiple fixtures. The important point here is that the scope of these tests is twofold: first, to define the functionality of the security controller, and second, to establish a relationship with the SecurityService. In both cases the boundary of the test scope is the SecurityController, not the overall requirement to validate a user.
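As a sketch of this traditional style with the operational code filled in, under stated assumptions: ISecurityService, FakeSecurityService and the bool return type are hypothetical (in ASP.NET MVC the action would normally return an ActionResult). Note that every test name refers to a component, the SecurityController or the SecurityService, rather than to the business requirement.

```csharp
using NUnit.Framework;

// Hypothetical dependency of the controller; faked out in these tests.
public interface ISecurityService
{
    bool Validate(string userName, string password);
}

// Hypothetical controller being driven out by the fixture below.
public class SecurityController
{
    private readonly ISecurityService _securityService;
    public SecurityController(ISecurityService securityService) { _securityService = securityService; }

    public bool Validate(string userName, string password)
    {
        if (string.IsNullOrEmpty(userName) || string.IsNullOrEmpty(password)) return false;
        return _securityService.Validate(userName, password);
    }
}

// Hand-rolled fake: returns a canned answer and records whether it was called.
public class FakeSecurityService : ISecurityService
{
    public bool Answer { get; set; }
    public bool WasCalled { get; private set; }

    public bool Validate(string userName, string password)
    {
        WasCalled = true;
        return Answer;
    }
}

[TestFixture]
public class SecurityControllerTests
{
    [Test]
    public void Validate_returns_true_when_SecurityService_accepts_credentials()
    {
        var controller = new SecurityController(new FakeSecurityService { Answer = true });
        Assert.That(controller.Validate("sally", "secret"), Is.True);
    }

    [Test]
    public void Validate_returns_false_when_SecurityService_rejects_credentials()
    {
        var controller = new SecurityController(new FakeSecurityService { Answer = false });
        Assert.That(controller.Validate("sally", "wrong"), Is.False);
    }

    [Test]
    public void Validate_does_not_call_SecurityService_when_password_is_empty()
    {
        var service = new FakeSecurityService();
        new SecurityController(service).Validate("sally", "");
        Assert.That(service.WasCalled, Is.False);
    }
}
```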

Outside in Test Driven Development

“Modeling business requirements as Tests creates cohesion between the originating requirements and the end production code”

Outside in Test Driven Development shares a lot with Behavior Driven Development; however, the simplest way to think about Outside in Test Driven Development is as a half-way house between traditional Test Driven Development and Behavior Driven Development. This technique is quite often used by teams that do not have the time or desire to invest in BDD but are very aware of the weaknesses of a traditional TDD approach described in the preceding section.

So what is the difference between traditional TDD and Outside in TDD?

Whereas traditional TDD expects the developer to think in terms of which objects they must create and then to write tests that directly test those objects’ operations, Outside in TDD urges the developer to think about how to express the requirement, and how to write tests that reflect it using semantics and fluent language that a business person could understand and trace back to the original requirement / story.

Ian Cooper has a good presentation that dives into the depths of why classic TDD is not always the answer; the video can be found here: http://vimeo.com/68375232 . Ian (@ICooper) talks about the Ice Cream Cone testing anti-pattern; a little more detail on this can be found in this blog post: http://sk176h.blogspot.co.uk/2013/05/test-pyramid-and-ice-cream-cone-anti.html

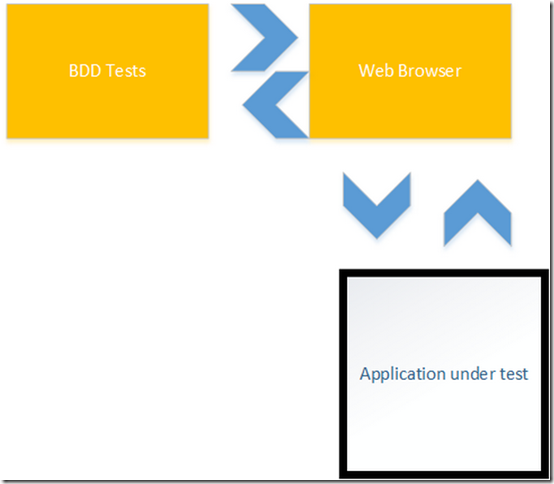

Whistle stop explanation of BDD

The definition above sounds very similar to BDD, and in truth the line is very blurred; the difference is really only evident in the implementation. For comparison, this article explains BDD from a step-by-step perspective.

With a BDD style approach, if we were building a web application, we would take a whole story as the scope for our test. We would generally use a web driver to automate a web browser. The automated web browser would take the same path a user would take when using the site to execute the functionality expressed in the target story. In pure BDD we would not violate the black-box nature of the target system: any setup or data required for the test would be provided either by using the system directly or by running setup scripts against the data store, as opposed to mocking or faking the data store as we would with a technique that tests inside the application black box.

The spirit of BDD is to emulate the final user experience; this means the test framework must have only the same rights, permissions, visibility and access that the intended actor / end user would have.

BDD tests must follow the same rules as any other tests: they must be atomic. For example, they need to set up context for themselves and also tear down context and clean up, leaving no footprint.

Katie’s web cast shows BDD in action with Azure.

Download: http://www.cloud-coder.co.uk/blog/videos/BDDwithStoryQandAzure.mp4

YouTube: http://youtu.be/x18Kam2YEEM

Below we see a very simple illustration of the black box nature of BDD in the context of a web application.

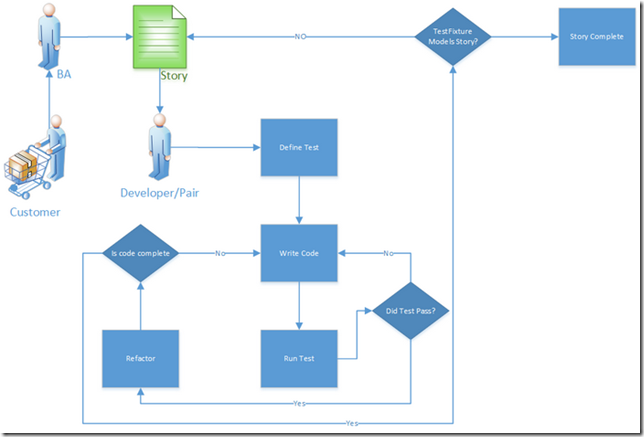

Outside-in Test Driven Development: an example workflow

Let us now look at what an example workflow for outside-in Test Driven Development might look like…

● Requirements are gathered

● Epics, Features and stories are fleshed out for the iteration

● Sprint or Iteration planning meeting is run, stories are estimated etc.

● A developer / pair picks up a story

● The developer / pair decides whether the story is too large to fit into a single outside-in test; in this case it is

● The developer / pair breaks apart the acceptance criteria and finds the first business requirement

● The developer / pair models the business requirement as a test, giving it a fluent and descriptive name

● The developer / pair decides to run the test from above the controller, not above the view

● The developer / pair uses the test to drive out the whole stack: a controller, service, repository and mocked data provider

● The developer / pair repeats these steps until the target TestFixture covers all the business functionality expressed in the story

● The story and TestFixture are complete. *Note: TestFixtures often span multiple stories; for simplicity this example does not model that.
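The workflow above can be sketched in C#. This is a minimal illustration rather than the article's own code: the order-history story and the `OrdersController`, `OrderService`, `OrderRepository` and `IOrderDataProvider` names are all hypothetical, and a hand-rolled fake stands in for both a mocking framework and an MVC controller base class so that the example has no external dependencies. The test enters above the controller and lets the requirement pull each layer into existence.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical domain type for the story "a customer can see their order history".
public record Order(int Id, string CustomerId, decimal Total);

// The seam we mock: the real data provider would talk to a database.
public interface IOrderDataProvider
{
    IEnumerable<Order> AllOrders();
}

// Hand-rolled fake standing in for a mocking framework.
public class FakeOrderDataProvider : IOrderDataProvider
{
    private readonly List<Order> _orders;
    public FakeOrderDataProvider(params Order[] orders) => _orders = orders.ToList();
    public IEnumerable<Order> AllOrders() => _orders;
}

public class OrderRepository
{
    private readonly IOrderDataProvider _provider;
    public OrderRepository(IOrderDataProvider provider) => _provider = provider;
    public IEnumerable<Order> ForCustomer(string customerId) =>
        _provider.AllOrders().Where(o => o.CustomerId == customerId);
}

public class OrderService
{
    private readonly OrderRepository _repository;
    public OrderService(OrderRepository repository) => _repository = repository;
    public IReadOnlyList<Order> OrderHistory(string customerId) =>
        _repository.ForCustomer(customerId).OrderBy(o => o.Id).ToList();
}

// Plain class standing in for an MVC controller, so the sketch has no web dependencies.
public class OrdersController
{
    private readonly OrderService _service;
    public OrdersController(OrderService service) => _service = service;
    public IReadOnlyList<Order> History(string customerId) => _service.OrderHistory(customerId);
}

// The outside-in test enters above the controller and is named after the requirement.
public static class CustomerOrderHistoryStory
{
    public static bool ACustomerSeesOnlyTheirOwnOrders()
    {
        var provider = new FakeOrderDataProvider(
            new Order(1, "alice", 10m), new Order(2, "bob", 5m), new Order(3, "alice", 7m));
        var controller = new OrdersController(new OrderService(new OrderRepository(provider)));

        IReadOnlyList<Order> history = controller.History("alice");

        return history.Count == 2 && history.All(o => o.CustomerId == "alice");
    }
}
```

Constructor injection at every layer is what lets the test swap only the bottom of the stack while still exercising the controller, service and repository for real.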

Diagram of Outside in Test Driven Development Flow and Scope

The flow above shows that the story drives the developer / pair; it is the developer’s job to represent those requirements faithfully in their tests. By using emergent design principles, which we discuss briefly later in this article, we can drive the code stack from the originating requirements while our test definitions provide a mirror of those requirements.

C# example of outside-in test definitions.

The image below shows how the tests might be written from a business perspective: their definitions do not mention any component or technology artifact. If you compare this with the traditional Test Driven code example, the difference in approach and intent becomes clear.
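The following hedged C# sketch illustrates the same idea: the test names read as business statements and say nothing about components. The free-delivery story, the £50 threshold, the delivery charge and every name in it are assumptions made for illustration, and plain static methods returning `bool` stand in for NUnit tests so the sketch runs without a framework.

```csharp
// Hypothetical story: "Orders of £50 or more qualify for free delivery."
public static class Checkout
{
    public const decimal FreeDeliveryThreshold = 50m;     // assumed business rule
    public const decimal StandardDeliveryCharge = 4.95m;  // assumed charge

    public static decimal DeliveryChargeFor(decimal basketTotal) =>
        basketTotal >= FreeDeliveryThreshold ? 0m : StandardDeliveryCharge;
}

// Test names a product owner could trace straight back to the story.
// A component-centric name for the first test might instead be
// "Checkout_DeliveryChargeFor_ReturnsZero".
public static class FreeDeliveryStory
{
    public static bool OrdersOfFiftyPoundsOrMoreAreDeliveredFree() =>
        Checkout.DeliveryChargeFor(50m) == 0m && Checkout.DeliveryChargeFor(120m) == 0m;

    public static bool OrdersUnderFiftyPoundsPayTheStandardDeliveryCharge() =>
        Checkout.DeliveryChargeFor(49.99m) == Checkout.StandardDeliveryCharge;
}
```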

“Developers should use every technique available to make their code follow defined business requirements. It is not a developer’s job to invent business requirements or scope; the scope of our creativity lies in the technical implementation of others’ dreams.”

Emergent design works with the principle “You Aren’t Going to Need It” (YAGNI): you should not write a line of code unless a requirement or an essential refactoring drives you to need it. Emergent design takes this to a new level; implemented in a pure form, it says the following…

● Do only enough architecture up front to enable you to write your first test

● Make iteration one a productive iteration

● No architecture survives a project life time and remains unchanged

● Allow the code to guide your architecture

● Only write code that your tests drive you to write (note: there are exceptions when testing below the UI)

● Make your development process an organic experience allowing your code to grow under the guidance of tests

● Nothing is set in stone; code is good enough to get you from the point when it is written until the point where you need to change it

● Building applications is a journey of continual transformation, not only in thought, technique, understanding and practices but also in the codebase and the architecture, which evolve as we learn and encounter new situations.

● Architecture and code are fluid; they are owned by all their creators rather than by any individual, and anyone has the right to change them if they no longer fit.

We have made some powerful statements above, but what is emergent design on a day-to-day basis? I have written a few articles that I believe illustrate emergent design; the links can be found below…

http://www.cloud-coder.co.uk/blog/index.php/2012/11/emergent-design-overview/

Although this section has been brief, emergent design is essential to the techniques covered in this article. I highly recommend reading the articles linked above and keeping an open mind about emergent design, which is a new concept for a lot of people, as you read the rest of this article.

Using Test Driven Development to explore complexity

Software development often has to deal with complex requirements, which can be thought of as falling into two categories:

● Business

● Technical

There are a number of ways to model these requirements; quite often business requirements remain complex even when broken down to story size.

One way Test Driven Development can help in this scenario is by breaking complexity into smaller parts until the requirements model simple steps.

Take, for instance, calculating Roman numerals. Our first approach might be to write tests for each of the rules for converting Roman numerals to decimals. We could find these rules on the Internet, and it would then be a simple task to write our conversion code; in fact this URL will show us how…

http://www.periodni.com/roman_numerals_converter.html

However, this is not the Test Driven Development approach. With TDD we would start by writing a test that converts the number 1 to I, and then write tests that drive out the conversion of each unit up to 9 into its Roman numeral equivalent. What is important is that we do the simplest thing to make each test pass, and wait until the tests drive us to implement the general conversion rule.

Working in this fashion lets us subdivide complex problems into smaller, more understandable problem sets; as we build on top of these smaller, less complex test units, we expand our understanding of the larger problem.
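As an illustration, here is one place the Roman numeral tests for 1 to 9 might lead. This is a sketch that assumes the tests were written in order from 1 upwards; the comments record the "simplest thing" at each stage, and `RomanNumerals.ToRoman` is a hypothetical name. Tests for 10, 40, 50 and beyond would extend the table in the same way.

```csharp
using System;
using System.Text;

// Hypothetical end state after driving out the units 1 to 9 one test at a time.
public static class RomanNumerals
{
    // How the tests might have driven this (assumed order):
    //   ToRoman(1) == "I"          -> simplest pass: return "I";
    //   ToRoman(2), ToRoman(3)     -> simplest pass: new string('I', number);
    //   ToRoman(4) == "IV"         -> the pattern breaks; special-case 4
    //   ToRoman(5) .. ToRoman(9)   -> special cases multiply, so refactor into a
    //                                 value table: the general rule emerges here.
    private static readonly (int Value, string Symbol)[] Numerals =
    {
        (9, "IX"), (5, "V"), (4, "IV"), (1, "I")
    };

    public static string ToRoman(int number)
    {
        if (number < 1 || number > 9)
            throw new ArgumentOutOfRangeException(nameof(number), "Only units 1 to 9 have been driven out so far.");

        var result = new StringBuilder();
        foreach (var (value, symbol) in Numerals)
        {
            // Greedily take the largest numeral that still fits, as many times as it fits.
            while (number >= value)
            {
                result.Append(symbol);
                number -= value;
            }
        }
        return result.ToString();
    }
}
```

The range guard is deliberate: it records that no test has yet asked for anything beyond 9.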

The situation that sticks most in our minds was a few years ago, when we were asked to produce a TV scheduling application that consumed a complicated XML feed. We could have handled the complexity by trying to model the feed in spreadsheets, or by spending hours thinking and discussing how to handle it. The approach we actually took was to write a test that dealt with one day, one hour and one program, and then to build up from that test until we had covered the whole set of requirements.
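A first test in that spirit might look like the sketch below. The real feed format is not given in the article, so the `<schedule>`/`<day>`/`<hour>`/`<programme>` XML here is invented, as are the `ScheduleParser` and `Programme` names. The parser deliberately handles only one day, one hour and one program, because that is all the first test asks for.

```csharp
using System;
using System.Globalization;
using System.Xml.Linq;

// Invented feed shape for illustration; the real feed was far more complicated.
public record Programme(DateTime Day, int Hour, string Title);

public static class ScheduleParser
{
    // Fixture for the very first test: 1 day, 1 hour, 1 program.
    public const string OneDayOneHourOneProgram =
        "<schedule><day date=\"2013-05-20\"><hour value=\"18\">" +
        "<programme title=\"Evening News\" /></hour></day></schedule>";

    // Simplest thing that passes the first test: read the first day, hour and programme.
    // Later tests (several hours, several days, ...) would drive this towards loops.
    public static Programme ParseFirst(string xml)
    {
        XElement day = XDocument.Parse(xml).Root.Element("day");
        XElement hour = day.Element("hour");
        XElement programme = hour.Element("programme");

        return new Programme(
            DateTime.ParseExact((string)day.Attribute("date"), "yyyy-MM-dd", CultureInfo.InvariantCulture),
            (int)hour.Attribute("value"),
            (string)programme.Attribute("title"));
    }
}
```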

When we need to break apart technical complexity, we can use mocking to replace elements of the technical stack and external dependencies with simple mock objects.
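Here is a minimal sketch of the idea in C#, using hand-rolled fakes rather than a mocking library. A hypothetical `ReminderService` depends on a clock and a notifier, which in production might be the system clock and an email sender. In the test both are replaced, so the business rule (remind when a program starts within 15 minutes) can be driven out on its own. The 15-minute rule and all the names are assumptions.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical seams: in production these would wrap the system clock and an email sender.
public interface IClock { DateTime Now { get; } }
public interface INotifier { void Send(string message); }

public class ReminderService
{
    private static readonly TimeSpan ReminderWindow = TimeSpan.FromMinutes(15); // assumed rule
    private readonly IClock _clock;
    private readonly INotifier _notifier;

    // Dependencies arrive through interfaces, so tests can substitute fakes.
    public ReminderService(IClock clock, INotifier notifier)
    {
        _clock = clock;
        _notifier = notifier;
    }

    public void RemindIfStartingSoon(string title, DateTime startsAt)
    {
        TimeSpan untilStart = startsAt - _clock.Now;
        if (untilStart >= TimeSpan.Zero && untilStart <= ReminderWindow)
            _notifier.Send(title + " is starting soon");
    }
}

// Stub: always reports the same time, so the test is deterministic.
public class FixedClock : IClock
{
    public FixedClock(DateTime now) => Now = now;
    public DateTime Now { get; }
}

// Mock: records each message so the test can verify what was sent.
public class RecordingNotifier : INotifier
{
    public List<string> Sent { get; } = new List<string>();
    public void Send(string message) => Sent.Add(message);
}
```

A test would set the fixed clock to 17:50, ask about programs at 18:00 and 21:00, and expect exactly one reminder to have been recorded.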

The Red, Green, Refactor principle is almost a creed amongst test-driven developers; it describes the life cycle of creating a test. Let us explain each step in the creed…

● Red – you have written enough code in your test for it to build, and the test runner shows a red bar because the test's assertion does not yet pass (in other words, go green).

● Green – you have written just enough code, doing the simplest thing possible, to make the test pass; the test runner then goes green.

● Refactor – you go back over your code to improve its quality. You can now do this safely because the first two steps, Red and Green, have given you test coverage.

Test runners such as Resharper have a colored bar that, when you run a test suite, turns green if the tests succeed or red if any fail. Resharper and other test runners generally also show traffic lights or ticks and crosses next to each test to indicate which passed and which failed. It is from this visual display that we derive the first part of the creed: Red, Green.

Refactoring is the act of making the code better without changing its behavior. There are a number of techniques we can apply when looking for, and fixing, sections of code that need to be refactored:

● DRY – Don’t Repeat Yourself – if you have duplication in your codebase, refactor to remove it.

● Long methods are bad – if your method has too much code in it, try to apply SRP, the Single Responsibility Principle, which says that a unit of code should do one thing and one thing only.

● If you have a large class or operation containing a set of related logic or related methods – consider SOC, Separation of Concerns, which says that a related group of code should be separated into its own section; in our case this would most likely be a separate class.

● DI – Dependency Injection – surface your dependencies: instead of creating an instance of, say, a service inside a controller, pass in an already created instance through its interface.

● Low Cyclomatic Complexity – in simple terms, cyclomatic complexity counts the independent paths through a method, so every branch or loop adds to it. Wikipedia explains it in more depth: http://en.wikipedia.org/wiki/Cyclomatic_complexity. In practice this means refactoring out embedded logic and nested loops, which can normally be pulled out into a separate method or a well-structured piece of LINQ: http://msdn.microsoft.com/en-gb/library/bb397676.aspx.

● Semantic method names – make sure your method names say what they do; do not worry about being concise.
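To tie several of these together, here is a hedged before/after sketch in C#. The invoice domain and the `Before`/`After` names are hypothetical. Both versions return the same result, but the refactored one replaces nested loops and branches with a LINQ query, has a semantic name, and extracts the line calculation into its own method.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical domain types for the refactoring example.
public record Line(string Product, int Quantity, decimal UnitPrice);
public record Invoice(bool IsPaid, List<Line> Lines);

public static class Before
{
    // Nested loops plus two branches: higher cyclomatic complexity and a vague name.
    public static decimal Calc(List<Invoice> invoices)
    {
        decimal total = 0;
        foreach (var invoice in invoices)
        {
            if (invoice.IsPaid)
            {
                foreach (var line in invoice.Lines)
                {
                    if (line.Quantity > 0)
                    {
                        total += line.Quantity * line.UnitPrice;
                    }
                }
            }
        }
        return total;
    }
}

public static class After
{
    // Same behavior: a semantic name, and the loops and conditions become one LINQ query.
    public static decimal TotalOfPaidInvoices(IEnumerable<Invoice> invoices) =>
        invoices.Where(invoice => invoice.IsPaid)
                .SelectMany(invoice => invoice.Lines)
                .Where(line => line.Quantity > 0)
                .Sum(line => LineTotal(line));

    // Extracted so the line calculation has one home (DRY) and one job (SRP).
    private static decimal LineTotal(Line line) => line.Quantity * line.UnitPrice;
}
```

Because the behavior must not change, a test asserting that both versions agree on the same input is exactly the safety net the Refactor step relies on.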

There are a number of other principles that can be applied; I highly recommend the following book…

Clean Code – Robert Martin

One small note on refactoring: Resharper is simply a must. Its functionality makes refactoring quick and significantly less risky.

Concepts: How to be Brave

Katie’s webcast on being brave

Download: http://www.cloud-coder.co.uk/Blog/videos/AgileTeamsBeingBold.mp4

YouTube: http://youtu.be/o05kiiBfa1M

“Collective code ownership is the practice of empowering each team member to feel ownership of the code, and making sure they know they have both the right and the responsibility to change the codebase as needed. This is a very important principle for teams practicing emergent design, and agile development in general.”

“The perfect process is only perfect while it fits the team, project and situation. It is necessary to embrace continuous change in order to keep our process palette fresh and relevant; it is each team member’s responsibility to ring the changes as necessary.”

“When a tool becomes a hindrance to the team or the project, change it”

To work in an emergent fashion, in both architecture and code, the team needs to feel empowered. In effect, being brave means that each team member feels empowered to do what they believe is right at any given moment, in the codebase and in the team's process palette.

There are several systems we can use to help a team feel safe making changes to the codebase without team-level consultation. To provide a fluid environment that allows individuals to embrace principles like “Being Brave” safely, we must supply a test-driven, change-controlled environment. It is imperative that the feedback loop is quick, so that good code is not blindly built on top of bad code; this is where continuous integration lends a helping hand.

The most important step for a team that wants reliable source code ownership is to implement a source control system. A good quality source control system will safeguard your source code, allow you to recover from disasters and let you roll back to a stable state should someone submit code that “Breaks your build”.

Good source control systems also use the concept of branching, which allows teams to work on a disconnected version of the codebase cloned from the production version. The branch can then be merged back into the production version once its code has been verified.

It is important that the source control system you choose integrates seamlessly with both your operating system and your IDE, in this case Visual Studio. Using the integrated tool suites, it is easy for your team to look at changes not yet committed and to compare previously committed change sets. Additionally, most integrated toolsets include a traffic-light system that tells you at a glance which files have pending changes.

One of the truly awesome features of good source control systems is their ability to present conflicting changes to developers, so that they can avoid losing code when working on the same file.

Source Control Providers and Tool sets

In this article I am not going to try to cover all source control systems, but I will supply links to further information about some of the most popular and stable products. Before we look at the available options, let us first look at some of the requirements for a good source control provider.

● Your selected Source control provider must enable its data to be backed up and restored

● Your selected Source control provider must provide seamless integration with both your Operating System and your Integrated Development Environment.

● Your selected Source control provider must have reliable merge and rollback tooling

● Your selected Source control provider must be run on adequate hardware

● Your selected Source control provider must support Branching

● Your selected Source control provider must be reliable

Having considered the requirements above, I would now like to provide some starting points for your investigation into the selection of your source control provider and companion tooling.

Subversion [SVN]

SVN has been around for a number of years and is now very mature, with fully integrated tooling. It is an excellent choice of source control provider for software teams: it is a low-friction offering in terms of adoption and has a vast array of tooling. It is also worth noting that many companies provide hosted SVN repositories at relatively low cost.

Recommendations for Hosted SVN Services

● Unfuddle [https://unfuddle.com/]

● Codespaces [http://www.codespaces.com/]

Recommendation for Tooling for SVN

● SVN [http://subversion.apache.org/]

● VisualSVN Client – Visual Studio integration Addin [http://www.visualsvn.com/]

● VisualSVN Server – Easy to administer and install SVN version [http://www.visualsvn.com/]

● SlikSVN – Client SVN command line tooling [http://www.sliksvn.com/en/download]

● TortoiseSVN – Windows SVN Client integration [http://tortoisesvn.net/downloads.html]

Final Thoughts

SVN is my go-to choice for teams who are not familiar with, or do not need, the distributed nature and power of Git, and who are not tied to Team Foundation Server by their Application Lifecycle Management choices.

Git

Git is a distributed source control provider. In simple terms, this means a developer can commit locally while working on their code, gaining the benefits of source control on their own machine, and then, when they are happy with their code, “Push” it to the central server. Git comes at a steep cost in learning curve: it is not as straightforward as SVN, and teams will spend a lot of time working on the command line. However, it is an awesome version control system and well worth the effort to learn in depth.

Recommendations for Hosted Git Services

● GitHub [https://github.com/]

Recommendation for Tooling for Git

● GitHub client for Windows [https://help.github.com/articles/set-up-git]

● Git for Windows + msysGit [http://msysgit.github.com/]

● TortoiseGit – Windows integration [https://code.google.com/p/tortoisegit/downloads/list]

● Git Visual Studio plug-in [http://visualstudiogallery.msdn.microsoft.com/63a7e40d-4d71-4fbb-a23b-d262124b8f4c]

Final Thoughts

If your team has the time and is prepared for a few false starts, in my personal opinion Git is the route to go; but please be aware that, as mentioned above, there is a steep learning curve.

Team Foundation Server

TFS is outside the scope of this article, as implementing TFS is a large investment in a full ALM [Application Lifecycle Management] system and process. Implementing TFS fully requires specialized consultancy and a lot of planning, and getting TFS wrong can be very expensive for a team. In my opinion TFS is not a good first choice of source control for beginners to TDD / Agile unless appropriate guidance is on hand.

TFS Links…

http://msdn.microsoft.com/en-gb/vstudio/ff637362.aspx

In this section I will suggest some practices that your team might wish to discuss and adapt to suit its own principles. The suggestions are based on moving at speed in a pair-programming, requirement-driven agile environment while maintaining code safety.

Build Process

It is vital that the team has a solid build and build verification process. We can facilitate this in part by implementing a Continuous Integration (CI) process; let’s now look at what this might look like, and at some of the candidate technologies you might choose to put CI in place.

Continuous Integration Explained

When a developer / pair works solo on a codebase, they are working in an isolated environment where their changes cannot affect anyone else and other people’s work will not affect them. If they are following TDD or a similar set of techniques, the solo requirement-driven developer’s / pair’s workflow is likely to consist of the following steps.

Diagram of typical Solo / Pair development steps when using TDD or similar variant

● Developer / Pair picks up a set of requirements

● Decides on the first test to be written

● [RED] Writes enough code to be able to build

● [GREEN] Makes the test pass doing the simplest thing

● [REFACTOR] Perfects and changes the code to give higher code quality and remove duplication, without altering functionality

● Checks in code

● Repeats the cycle until all requirements are built

The flow above shows the solo developer writing a passing test and refactoring the code until they are satisfied that further refactoring would do no practical good. Happy with the codebase and the test, the developer checks into their source control system and moves on to the next set of requirements. This cycle is very effective for a solo developer or developer pair; however, as stated above, it does not take into account multiple developers / pairs working on the codebase at the same time. For this we need a more complex cycle.

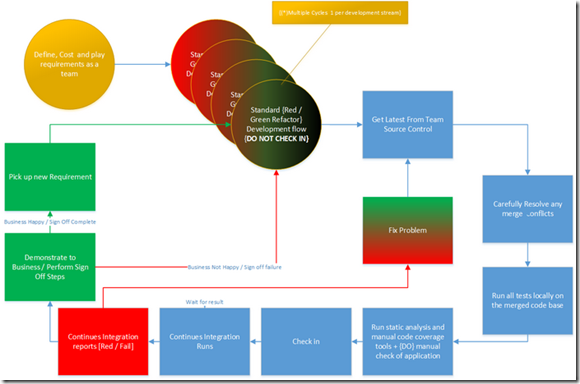

Automated build and test run

Larger projects, however, are team undertakings, and as such we need additional steps within the development flow. The new combined flow must take the following considerations into account:

● Conflicting changes in different developers’ code – Merge

● Code checked in since the developer’s checkout that breaks new unit tests – Automated unit test run

● Change sets that conflict and break the build – Automated build

● Code quality considerations – Static code analysis

● Continual delivery

When working in a team context we must always consider the people we are working with, and this leads to changes in our development flow. Unfortunately most of these changes cannot be automated or policed by automated systems, so discipline at both the team level and the individual / pair level is very important in this scenario.

Diagram of typical team development steps when using TDD or similar variant

One of the controversial techniques regularly used when practicing Requirement Driven Development in an agile environment is pair programming. So what is pair programming?

Pair Programming Is…

● A collaborative experience

● A practice that extends the productivity of the developers involved by sharing the workload and keeping the programmers productive

● Fast paced

● Focused on quality, with continual review of the work being performed

● A methodology focused on knowledge sharing

● Involves two people splitting what would normally be seen as one person’s work

● Has no room for ego or elitism

● A team sport

● A practice that requires discipline

● A practice that requires the correct environment to be successful

Pair Programming can be…

● Fun

● Challenging

● Exhausting

● Intimidating

● Revealing

● Initially considered expensive by business

Pair programming is when two developers “Pair” on a…

● Story: Granular Requirement

● Bug: Issues raised from some form of testing

● Spike: Experimental development to try or test a framework, idea or assumption

In a pair scenario we would expect to see two developers sitting comfortably at a large desk, with a lot of communication taking place between them. Each developer would have their own screen, with both screens mirrored and set up at the optimum height for that developer's comfort. Each developer would also have their own keyboard and mouse and a comfortable share of the desk space.

When developers engage in pair programming, we would expect to see them start programming together at a time agreed by both developers. The developers would break for lunch at the same time and return from lunch at the same time. They would finish their pairing at the same time, and that would be all the work either developer performs on the collective codebase until they meet to continue pairing the following day. During the business day only 70% of the available work time should be scheduled for pair programming; the rest of the day should be used for stand-up meetings, breakout sessions, checking email, personal research and so on.

One of the terms you might hear used in reference to someone who has been practicing Test Driven Development with empowerment tools such as Resharper for a while is Keyboard Ninja. A keyboard ninja is someone who doesn’t need a mouse and is really fast and accurate with keyboard shortcuts, both within Visual Studio and when using the Resharper add-in. The keyboard ninja thinks at the speed of light, their fingers fly across the keyboard and they are highly productive.

Tools

We will now take a quick look at the tools required for Test Driven Development in .Net. You will already have some of these tools installed, while others might be new to you; I will give a brief description and URL for each tool.

Tools: Environment

We really only have one serious option for working with the .net stack, and that is of course Microsoft Visual Studio, Premium edition and above. This is an excellent IDE, but it lacks some features that empower deep refactoring and true Test Driven Development, so to complement Visual Studio we will be using the Resharper add-on.

Visual Studio can be obtained from: Visual Studio 2012 Overview | Microsoft Visual Studio

Pricing is quite often more economical if purchased as a MSDN subscription.

Resharper is considered by agile .net professionals to be an essential plugin for Visual Studio. It adds a varied array of essential features, including…

● Navigation features

● Refactoring features

● Test runner

● Code Compilation

● Advanced code analysis

● Fully configurable code standards

● Centralized Team Settings

● Code visualization features

Arguably the most powerful feature of Resharper is that its features are keyboard-enabled; many developers use a combination of Resharper and Visual Studio to become “Keyboard Ninjas”. Developers who reach this level of expertise are, as a general rule, more productive because their use of the keyboard and Resharper lets them focus entirely on the code rather than on the mouse or trackball and, perhaps more importantly, on menus and context menus.

Resharper can be obtained from: http://www.jetbrains.com/resharper/

Tools: Frameworks

The idea of a test framework means many things to many people, and to some it will be a totally new concept. In this section I define a test framework as a library that enables you to write assertion-based code inside unit and behavior tests written in .Net code. With this definition in hand, let me briefly outline some of the available options.

Visual Studio Unit Test Framework

Information on Visual Studio Unit Test Framework and Tooling can be found at: http://msdn.microsoft.com/en-us/library/ms379625(VS.80).aspx

The Unit Test framework which ships with Visual Studio is now highly mature and quite stable. If you are a Microsoft developer who wants to keep away from open source offerings then this is your best and really only option.

The Framework is somewhat limited in its scope but with a little imagination is fully functional. The framework is highly integrated with Visual Studio IDE and makes use of the Visual Studio Test Runner.

xUnit

Information and downloads for xUnit can be found at: http://xunit.codeplex.com/

The xUnit framework is arguably the most complete and modern of the unit testing frameworks currently available in the .Net space. It is incredibly powerful; however, it is my personal belief that it is not the best framework to cut your unit testing teeth on. To leverage the real power of xUnit, I recommend doing additional reading about it once you understand the principles of unit testing from working with simpler frameworks such as NUnit.

On a final note, the Resharper test runner will not work with this framework out of the box; you need to follow these directions: http://xunitcontrib.codeplex.com/

NUnit

Information and downloads for NUnit can be found at: http://www.nunit.org/

● NUnit was one of the original unit testing frameworks. It is very straightforward to learn and implement, will run with all common test runners and additionally has its own test runner available.

● NUnit, while not as feature rich as xUnit, is my personal choice of testing framework when working with teams who are new to Test Driven Development.

● Resharper supports NUnit out of the box, and the combination of the Resharper test runner and NUnit is potent to say the least.

● Within this article we will be targeting NUnit as our unit testing framework, although the advice and concepts will translate to other testing frameworks.

StoryQ

Information and downloads for StoryQ can be found at: http://storyq.codeplex.com/

● StoryQ is a Behavior Driven Development framework. StoryQ allows you to define a story and break it into steps; for each step we define a code fragment that implements either setup or assertion code. StoryQ allows the developer to work outside in and make informed choices about how far they compromise the black-box nature of the system being tested.

● In the web world you often see frameworks such as StoryQ combined with web driver frameworks such as Selenium WebDriver (http://www.nuget.org/packages/Selenium.WebDriver/). These frameworks drive the browser to replicate the user experience and act as a test execution engine.

● StoryQ additionally facilitates writing multiple scenarios, and their associated test code, under a given story. The ability to model different scenarios allows happy, sad and edge-case paths to be tested as a block, which in turn allows us to fully encapsulate and model a requirement.
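StoryQ's own API is not shown in this article, so rather than guess at it, the sketch below hand-rolls a tiny Given/When/Then runner to show the shape of a story with a happy and a sad scenario. The `Scenario`, `Account` and `WithdrawCashStory` types are invented for illustration and are not part of StoryQ.

```csharp
using System;
using System.Collections.Generic;

// NOT StoryQ's API: a hand-rolled stand-in showing the Given/When/Then shape.
public class Scenario
{
    private readonly List<(string Text, Action Step)> _steps = new();
    private readonly string _name;

    public Scenario(string name) => _name = name;

    public Scenario Given(string text, Action step) => AddStep("Given " + text, step);
    public Scenario When(string text, Action step) => AddStep("When " + text, step);
    public Scenario Then(string text, Action step) => AddStep("Then " + text, step);

    private Scenario AddStep(string text, Action step)
    {
        _steps.Add((text, step));
        return this;
    }

    // Runs the steps in order; the first failing step is reported and the rest are skipped.
    public List<string> Run()
    {
        var report = new List<string> { "Scenario: " + _name };
        foreach (var (text, step) in _steps)
        {
            try
            {
                step();
                report.Add("  " + text + " [passed]");
            }
            catch (Exception ex)
            {
                report.Add("  " + text + " [FAILED: " + ex.Message + "]");
                break;
            }
        }
        return report;
    }
}

// Hypothetical system under test.
public class Account
{
    public decimal Balance { get; private set; }
    public Account(decimal balance) => Balance = balance;

    public bool TryWithdraw(decimal amount)
    {
        if (amount > Balance) return false;
        Balance -= amount;
        return true;
    }
}

public static class WithdrawCashStory
{
    public static List<string> Run()
    {
        var report = new List<string> { "Story: As an account holder I want to withdraw cash" };
        Account account = null;
        bool accepted = false;

        // Happy path.
        report.AddRange(new Scenario("Sufficient funds")
            .Given("my balance is 100", () => account = new Account(100m))
            .When("I withdraw 40", () => accepted = account.TryWithdraw(40m))
            .Then("the withdrawal is accepted and 60 remains", () => Ensure(accepted && account.Balance == 60m))
            .Run());

        // Sad path.
        report.AddRange(new Scenario("Insufficient funds")
            .Given("my balance is 20", () => account = new Account(20m))
            .When("I withdraw 40", () => accepted = account.TryWithdraw(40m))
            .Then("the withdrawal is refused and 20 remains", () => Ensure(!accepted && account.Balance == 20m))
            .Run());

        return report;
    }

    private static void Ensure(bool condition)
    {
        if (!condition) throw new Exception("Expectation not met");
    }
}
```

The readable report is the point: each line can be traced back to the wording of the story, which is what makes this style attractive to business stakeholders.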

The following post provides a step by step overview

Test Runners

The tests we write when undertaking Test Driven Development live in a .Net class library, and therefore we cannot run them as we would a web site or an executable. We need a tool to load the test assembly and execute the tests we have written. Test runners fall into three categories…

● Console Test Runners – Programs executed on the command line and by the Build / Integration server or other automatic process

● Test Runners with Separate User Interface – Programs that load the test assembly outside of Visual Studio

● Test Runners that work within Visual Studio – Addins that can be run directly as part of the development experience without needing the developer to leave Visual Studio
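To demystify what a console test runner does, here is a minimal C# sketch that uses reflection to find marked methods, run them and report pass or fail. The `[Check]` attribute and the `MiniConsoleRunner` and `SampleFixture` names are hypothetical stand-ins for a framework attribute such as NUnit's `[Test]`; real runners such as Gallio or the Resharper runner do a great deal more (setup and teardown, filtering, reporting to the IDE).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical attribute standing in for a test framework's [Test] attribute.
[AttributeUsage(AttributeTargets.Method)]
public class CheckAttribute : Attribute { }

public static class MiniConsoleRunner
{
    // Finds every public static parameterless method marked [Check] on the given type,
    // runs it, and reports PASS or FAIL. A thrown exception counts as a failure.
    public static List<(string Name, bool Passed)> Run(Type fixture)
    {
        var results = new List<(string Name, bool Passed)>();
        var tests = fixture.GetMethods(BindingFlags.Public | BindingFlags.Static)
            .Where(m => m.GetCustomAttribute<CheckAttribute>() != null && m.GetParameters().Length == 0)
            .OrderBy(m => m.Name);

        foreach (MethodInfo test in tests)
        {
            bool passed;
            try { test.Invoke(null, null); passed = true; }
            catch (TargetInvocationException) { passed = false; } // Invoke wraps the test's exception
            results.Add((test.Name, passed));
            Console.WriteLine((passed ? "PASS  " : "FAIL  ") + test.Name);
        }
        Console.WriteLine($"{results.Count(r => r.Passed)} passed, {results.Count(r => !r.Passed)} failed");
        return results;
    }
}

// A sample fixture: one passing test and one deliberately failing test.
public static class SampleFixture
{
    [Check] public static void AdditionWorks() { if (1 + 1 != 2) throw new Exception("maths is broken"); }
    [Check] public static void DeliberatelyFails() => throw new Exception("red bar");
}
```

A build server would typically turn the failure count into a non-zero exit code, which is how a console runner breaks the build.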

Gallio

Information on Gallio can be found at: http://www.gallio.org/

Gallio supplies all three variants of test runner: console, UI and IDE integration. Gallio works with virtually any common testing framework and is considered by many, including myself, the ultimate tool for running automated tests and for continuous integration scenarios via its console “Echo” test runner.

TestDriven.net

Information on TestDriven.net can be found at: http://www.testdriven.net/quickstart.aspx

TestDriven.net is a widely used test runner. It integrates fully with Visual Studio and is capable of running most popular testing frameworks, including MSTest. However, it does require special effort to run xUnit tests; support for running xUnit is available from the xUnit installer.

TestDriven.net adds support for testing to the output window in Visual Studio, the error panel and context menus.

This is an incredibly popular Test Runner and in a lot of software consultancies and teams it is considered the standard.

Resharper

Resharper can be obtained from: http://www.jetbrains.com/resharper/

Resharper is equipped with an integrated test runner. Resharper is the tool of choice for a large percentage of agile .net professionals, and in their opinion and mine the Resharper test runner, with its Visual Studio integration, is the best tool for the job.

Finally

Elevator Stories

http://www.bizjournals.com/twincities/stories/2002/11/11/smallb2.html?page=all

Agile versus traditional Architecture

Specflow

http://www.specflow.org/specflownew/

Cuke4net

http://gojko.net/2010/01/01/bdd-in-net-with-cucumber-cuke4nuke-and-teamcity/

Cavity Unit Test Framework

http://code.google.com/p/cavity/wiki/CavityTestingUnit

Agile Manifesto

http://agilemanifesto.org/principles.html

Emergent Design / Architecture

http://www.cloud-coder.co.uk/blog/index.php/2012/11/emergent-design-overview/

BDD in action

Cyclomatic Complexity

http://en.wikipedia.org/wiki/Cyclomatic_complexity

Visual Studio Test Framework – MSTest

http://msdn.microsoft.com/en-gb/library/microsoft.visualstudio.testtools.unittesting.aspx

xUnit

http://xunit.codeplex.com/wikipage?title=Comparisons&ProjectName=xunit#attributes

NUnit Ignore Attribute

http://www.nunit.org/index.php?p=attributes&r=2.2.10

Hosted SVN Solutions

Unfuddle https://unfuddle.com/

Codespaces http://www.codespaces.com/

Git Client Tools

GitHub client for Windows https://help.github.com/articles/set-up-git

Git for Windows + msysGit http://msysgit.github.com/

TortoiseGit – Windows Integration https://code.google.com/p/tortoisegit/downloads/list

Git Visual Studio plug-in http://visualstudiogallery.msdn.microsoft.com/63a7e40d-4d71-4fbb-a23b-d262124b8f4c

GitHub

Team Foundation Server

http://msdn.microsoft.com/en-gb/vstudio/ff637362.aspx

Resharper

http://www.jetbrains.com/resharper/

Visual Studio Unit Test Framework

http://msdn.microsoft.com/en-us/library/ms379625(VS.80).aspx

StoryQ

xUnit

Selenium

http://www.nuget.org/packages/Selenium.WebDriver/

NUnit

http://www.nunit.org/index.php?p=runningTests&r=2.6.1

Gallio

TestDriven.net

http://www.testdriven.net/quickstart.aspx

Books

User Stories Applied, Mike Cohn

Clean Code – Robert Martin

Katie-Jaye has worked on a number of Agile projects as a Coach, Developer and Agent for change. Katie-Jaye is an active speaker in both the UK and the USA at user groups and events. Katie-Jaye has worked on software for end customers such as Toyota, Coca-Cola, Elateral, Enta Ticketing, Thoughtworks, Dot Net Solutions, Global Radio, Solidsoft and The Carbon Trust. Katie recently set up the Windows Azure inside Solutions group and architected a huge Azure project for the administration of pharmaceuticals. Katie lives in Brighton in the UK with her partner and 2 cats.

Katie’s Blogs:

Http://www.Katiejayemartin.com

http://www.cloud-coder.co.uk/blog

Katie’s Twitter:

@Martindotnet

Linkedin:

Http://uk.linkedin.com/pub/jaye-martin/23/834/881/

Katie’s Email: